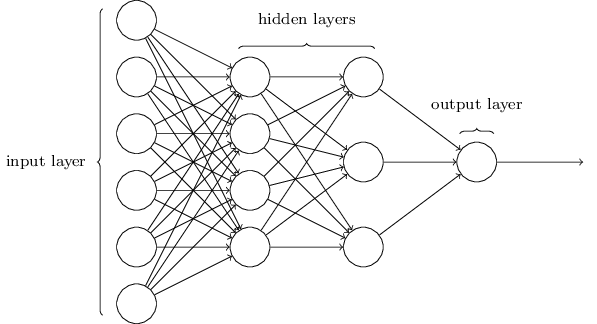

Simple Neural Network aka Multilayered perceptron

The goal of this exercise is to build your own neural network from scratch and train it to recognise handwritten digits. See The Perceptron for more information about the perceptron concept.

import math
import random
import time

import numpy as np
import scipy.special

print(np.version.version)

1.13.3

Reading and formatting data

def read_number(file, size):
    # Read `size` bytes and interpret them as a big-endian unsigned integer.
    return int.from_bytes(file.read(size), byteorder='big', signed=False)

def get_label_data(filename, number_label, offset):
    # Returns [number_of_items, labels]; labels is 0 when offset is out of range.
    with open(filename, 'rb') as file:
        magic_number = (0x00000801).to_bytes(4, byteorder='big')
        read_value = file.read(4)
        if read_value != magic_number:
            print("This isn't a label file!")
            return 0
        number_of_items = read_number(file, 4)
        data = []
        if offset >= number_of_items:
            return [number_of_items, 0]
        if number_label + offset > number_of_items:
            number_label = number_of_items - offset
        header_size = 8
        # Each label is one byte, so the offset is already a byte count.
        file.seek(header_size + offset)
        for i in range(number_label):
            data.append(read_number(file, 1))
        return [number_of_items, data]

def normalise_number(number, minimum, maximum):
    # Map a value from [minimum, maximum] to [0, 1].
    return (number - minimum) / (maximum - minimum)

def get_image_data(filename, number_images, offset):
    # Returns [number_of_items, rows, columns, images]; each image is a flat list of pixels in [0, 1].
    with open(filename, 'rb') as file:
        magic_number = (0x00000803).to_bytes(4, byteorder='big')
        read_value = file.read(4)
        if read_value != magic_number:
            print("This isn't an image file!")
            return 0
        number_of_items = read_number(file, 4)
        number_of_rows = read_number(file, 4)
        number_of_columns = read_number(file, 4)
        if offset >= number_of_items:
            return [number_of_items, number_of_rows, number_of_columns, 0]
        if number_images + offset > number_of_items:
            number_images = number_of_items - offset
        data = []
        minimum = 0.0
        maximum = 255.0
        image_size = number_of_rows * number_of_columns
        header_size = 16
        # Skip the header plus `offset` whole images (one byte per pixel).
        file.seek(header_size + image_size * offset)
        for i in range(number_images):
            pixels = []
            for j in range(image_size):
                pixels.append(normalise_number(read_number(file, 1), minimum, maximum))
            data.append(pixels)
        return [number_of_items, number_of_rows, number_of_columns, data]
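The readers above pull one byte at a time through `read_number`, which is easy to follow but slow for 60,000 images. As a possible alternative, here is a minimal sketch that parses the same header layout (big-endian 32-bit magic number, item count, then rows and columns for images) in one pass with `struct` and `np.frombuffer`. The function names `parse_idx_images` and `parse_idx_labels` are hypothetical, and the byte strings are tiny synthetic stand-ins so the sketch runs without the MNIST files.

```python
import struct

import numpy as np


def parse_idx_images(raw):
    """Parse an IDX3 image buffer into a (count, rows * cols) array of floats in [0, 1]."""
    # Header: four big-endian unsigned 32-bit integers.
    magic, count, rows, cols = struct.unpack(">IIII", raw[:16])
    if magic != 0x00000803:
        raise ValueError("not an IDX3 image file")
    pixels = np.frombuffer(raw, dtype=np.uint8, offset=16, count=count * rows * cols)
    # Same normalisation as normalise_number(value, 0.0, 255.0).
    return pixels.reshape(count, rows * cols) / 255.0


def parse_idx_labels(raw):
    """Parse an IDX1 label buffer into a 1-D array of integer labels."""
    # Header: two big-endian unsigned 32-bit integers.
    magic, count = struct.unpack(">II", raw[:8])
    if magic != 0x00000801:
        raise ValueError("not an IDX1 label file")
    return np.frombuffer(raw, dtype=np.uint8, offset=8, count=count)


# Synthetic data (an assumption for illustration): two 2x2 images and two labels.
fake_images = struct.pack(">IIII", 0x00000803, 2, 2, 2) + bytes([0, 255, 51, 102, 255, 0, 0, 0])
fake_labels = struct.pack(">II", 0x00000801, 2) + bytes([7, 3])

images = parse_idx_images(fake_images)
labels = parse_idx_labels(fake_labels)
print(images.shape, labels.tolist())
```

With real files, the buffer would come from `open(filename, 'rb').read()`. The result is a NumPy array rather than a list of lists, so code that indexes `data[j]` row by row should keep working.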

Build neural network

class NeuralNetwork:
    def __init__(self, inputnodes, hiddennodes, numberhiddenlayer, outputnodes, learning_rate):
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.nlayer = numberhiddenlayer
        self.onodes = outputnodes
        self.lr = learning_rate
        # activation function is the sigmoid function
        self.activation_function = lambda x: scipy.special.expit(x)
        # one weight matrix per connection between consecutive layers
        self.hlayers = []
        # create the weight matrices: input -> hidden, hidden -> hidden, hidden -> output
        for i in range(self.nlayer + 1):
            if i == 0:
                self.hlayers.append(np.random.normal(0.0, pow(self.inodes, -0.5), (self.hnodes, self.inodes)))
                print("wih", self.inodes, "->", self.hnodes)
            elif i == self.nlayer:
                self.hlayers.append(np.random.normal(0.0, pow(self.hnodes, -0.5), (self.onodes, self.hnodes)))
                print("who", self.hnodes, "->", self.onodes)
            else:
                self.hlayers.append(np.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.hnodes)))
                print("hidden", self.hnodes)
        self.last = len(self.hlayers) - 1

    def train(self, inputs_list, targets_list):
        # convert the inputs and targets to column vectors
        inputs = np.array(inputs_list, ndmin=2).T
        targets = np.array(targets_list, ndmin=2).T
        hidden_outputs = []
        hidden_inputs = []
        size = len(self.hlayers)
        # forward pass: keep every layer's output for the weight updates
        for i in range(size):
            if i == 0:
                hidden_inputs.append(np.dot(self.hlayers[i], inputs))
            else:
                hidden_inputs.append(np.dot(self.hlayers[i], hidden_outputs[-1]))
            hidden_outputs.append(self.activation_function(hidden_inputs[-1]))
        hidden_errors = None
        output_errors = targets - hidden_outputs[size - 1]
        # backward pass: walk from the output layer back to the first layer
        for i in range(size - 1, -1, -1):
            if i == size - 1:
                hidden_errors = np.dot(self.hlayers[i].T, output_errors)
                self.hlayers[i] += self.lr * np.dot((output_errors * hidden_outputs[i] * (1.0 - hidden_outputs[i])), np.transpose(hidden_outputs[i - 1]))
            elif i != 0:
                # `targets` now holds the error propagated from the layer above;
                # standard backpropagation would use that error directly
                # instead of subtracting this layer's output from it
                output_errors = targets - hidden_outputs[i]
                # hidden layer error is the output_errors, split by weights, recombined at hidden nodes
                hidden_errors = np.dot(self.hlayers[i].T, output_errors)
                self.hlayers[i] += self.lr * np.dot((output_errors * hidden_outputs[i] * (1.0 - hidden_outputs[i])), np.transpose(hidden_outputs[i - 1]))
            else:
                self.hlayers[i] += self.lr * np.dot((hidden_errors * hidden_outputs[i] * (1.0 - hidden_outputs[i])), np.transpose(inputs))
            targets = hidden_errors

    def query(self, inputs_list):
        # forward pass only: return the activations of the output layer
        inputs = np.array(inputs_list, ndmin=2).T
        hidden_outputs = []
        hidden_inputs = []
        size = len(self.hlayers)
        for i in range(size):
            if i == 0:
                hidden_inputs.append(np.dot(self.hlayers[i], inputs))
            else:
                hidden_inputs.append(np.dot(self.hlayers[i], hidden_outputs[-1]))
            hidden_outputs.append(self.activation_function(hidden_inputs[-1]))
        return hidden_outputs[-1]
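For comparison with the `train` method, here is a minimal sketch of the textbook backpropagation step for a sigmoid network trained on squared error. In that formulation, each layer's delta is the error arriving from the layer above multiplied by the sigmoid derivative `a * (1 - a)`, and the weight update is `lr * delta · previous_activation^T`. The helper names (`sigmoid`, `forward`, `backprop_step`) and the toy layer sizes are assumptions for illustration, not part of the class above. `sigmoid` is written with plain NumPy and computes the same function as `scipy.special.expit`.

```python
import numpy as np


def sigmoid(x):
    # Same function as scipy.special.expit, written with NumPy only.
    return 1.0 / (1.0 + np.exp(-x))


def forward(weights, inputs):
    """Return the activations of every layer, with the input column first."""
    activations = [inputs]
    for w in weights:
        activations.append(sigmoid(np.dot(w, activations[-1])))
    return activations


def backprop_step(weights, inputs, targets, lr):
    """One gradient-descent step on squared error; updates `weights` in place."""
    activations = forward(weights, inputs)
    out = activations[-1]
    # Output delta: error times the sigmoid derivative.
    delta = (targets - out) * out * (1.0 - out)
    for i in range(len(weights) - 1, -1, -1):
        update = np.dot(delta, activations[i].T)
        if i > 0:
            # Propagate the delta through the weights before they are updated.
            a = activations[i]
            delta = np.dot(weights[i].T, delta) * a * (1.0 - a)
        weights[i] += lr * update


# Hypothetical toy network: 4 inputs, two hidden layers of 5 nodes, 3 outputs.
rng = np.random.RandomState(0)
sizes = [4, 5, 5, 3]
weights = [rng.normal(0.0, n_in ** -0.5, (n_out, n_in))
           for n_in, n_out in zip(sizes[:-1], sizes[1:])]
x = rng.rand(4, 1)
t = np.array([[0.01], [0.99], [0.01]])

before = float(np.sum((t - forward(weights, x)[-1]) ** 2))
for _ in range(200):
    backprop_step(weights, x, t, lr=0.5)
after = float(np.sum((t - forward(weights, x)[-1]) ** 2))
print("squared error before:", before, "after:", after)
```

The sketch only shows that repeated steps reduce the error on a single sample. It says nothing about how this variant would score on MNIST compared with the results reported in this notebook.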

Create Brain class

class Brain:
    def __init__(self, nn):
        self.nn = nn
        self.total_iteration = 0

    def learn(self, data, label_data, number_iteration):
        # one iteration is a full pass over the training data
        for i in range(number_iteration):
            self.total_iteration += 1
            for j in range(len(data)):
                # target vector: 0.99 for the correct digit, 0.01 elsewhere
                targets = np.zeros(self.nn.onodes) + 0.01
                targets[label_data[j]] = 0.99
                self.nn.train(data[j], targets)

    def recognise(self, data, label_data):
        # returns the fraction of samples classified correctly
        score = 0
        for i in range(len(data)):
            res = self.nn.query(data[i])
            # the predicted digit is the output node with the highest activation
            label = np.argmax(res)
            if label == label_data[i]:
                score += 1
        return score / float(len(data))
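The `Brain` class relies on two small ideas: a target vector holding 0.99 at the correct digit and 0.01 elsewhere, and prediction by taking the index of the largest output. A common reason for using 0.01 and 0.99 rather than 0 and 1 is that a sigmoid output never reaches exactly 0 or 1. The sketch below isolates both steps; `make_targets` and `accuracy` are hypothetical helper names, and the output matrix is made-up illustrative data rather than real network output.

```python
import numpy as np


def make_targets(label, n_classes=10, low=0.01, high=0.99):
    """Build the target vector used for training one sample."""
    targets = np.full(n_classes, low)
    targets[label] = high
    return targets


def accuracy(outputs, labels):
    """Fraction of rows whose largest output sits at the labelled index."""
    predictions = np.argmax(outputs, axis=1)
    return float(np.mean(predictions == np.asarray(labels)))


print(make_targets(3))

# Made-up outputs for three samples over four classes (illustration only).
outputs = np.array([[0.10, 0.80, 0.05, 0.05],
                    [0.70, 0.10, 0.10, 0.10],
                    [0.20, 0.20, 0.50, 0.10]])
# The third sample is labelled 3 but predicted as 2, so two of three are correct.
print(accuracy(outputs, [1, 0, 3]))
```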

Execute training

if __name__ == "__main__":
    image_data = get_image_data("train-images.idx3-ubyte", 60000, 0)
    label_data = get_label_data("train-labels.idx1-ubyte", 60000, 0)
    # one input per pixel, 2 hidden layers of 200 nodes, 10 outputs, learning rate 0.1
    nn = NeuralNetwork(image_data[1] * image_data[2], 200, 2, 10, 0.1)
    brain = Brain(nn)
    iteration = 10
    image_test_data = get_image_data("t10k-images.idx3-ubyte", 10000, 0)
    label_test_data = get_label_data("t10k-labels.idx1-ubyte", 10000, 0)
    # baseline accuracy of the untrained network on the test set
    print("After ", 0, " iteration :")
    print("Success : ", brain.recognise(image_test_data[3], label_test_data[1]) * 100, "%")
    start_time = time.time()
    for i in range(iteration):
        # one pass over the training set, then evaluate on the test set
        brain.learn(image_data[3], label_data[1], 1)
        print("After ", i + 1, " iteration :")
        print("Success : ", brain.recognise(image_test_data[3], label_test_data[1]) * 100, "%")
    print("Execution time : ", time.time() - start_time, " secondes")

wih 784 -> 200

hidden 200

who 200 -> 10

After 0 iteration :

Success : 9.82 %

After 1 iteration :

Success : 91.24 %

After 2 iteration :

Success : 92.24 %

After 3 iteration :

Success : 92.84 %

After 4 iteration :

Success : 93.5 %

After 5 iteration :

Success : 90.86999999999999 %

After 6 iteration :

Success : 93.41000000000001 %

After 7 iteration :

Success : 93.82000000000001 %

After 8 iteration :

Success : 94.32000000000001 %

After 9 iteration :

Success : 93.69 %

After 10 iteration :

Success : 94.17 %

Execution time : 736.5199599266052 secondes

Links

YouTube

- The Coding Train - 10.4: Neural Networks: Multilayer Perceptron Part 1

- The Coding Train - 10.4: Neural Networks: Multilayer Perceptron Part 2

- [3Blue1Brown - But what *is* a Neural Network? Deep learning, chapter 1](https://www.youtube.com/watch?v=aircAruvnKk)

- 3Blue1Brown - Gradient descent, how neural networks learn | Deep learning, chapter 2